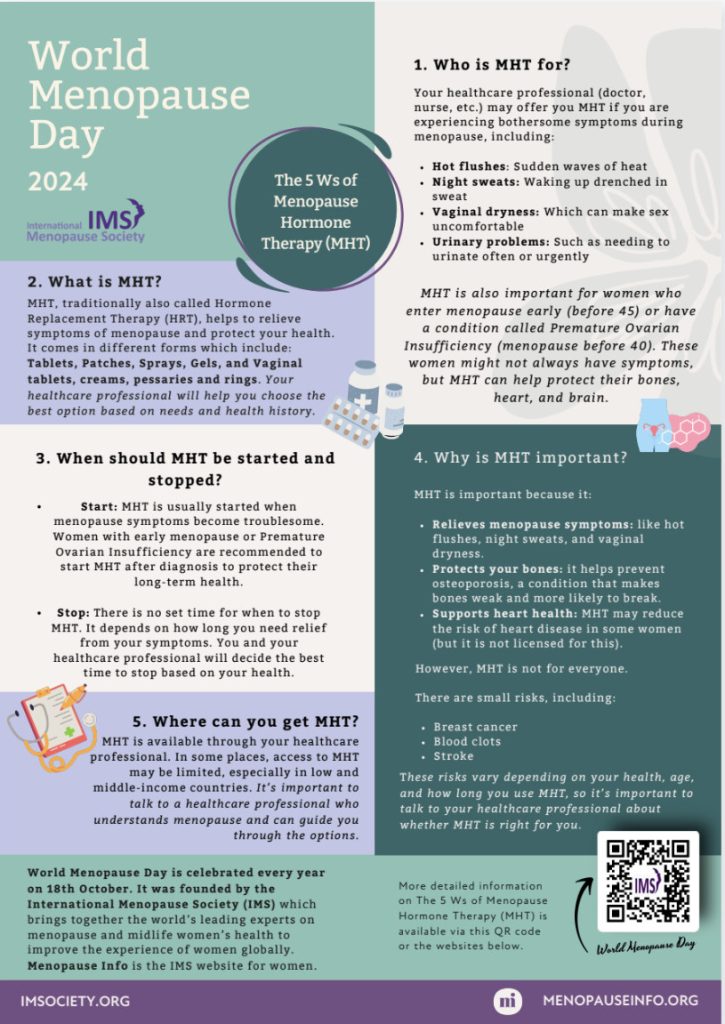

World Menopause Day is held every year on the 18th of October. The day was founded by the International Menopause Society (IMS) to raise awareness of menopause and to support options that improve health and well-being for women in midlife and beyond. The theme for World Menopause Day 2024 is "Menopause and Menopause Hormone Therapy." Despite many medical advances and a large increase in public awareness in recent years, there's still much more to be done. Each year, World Menopause Day shines a spotlight on the challenges women face during the menopause transition and highlights improvements in research, education and support.

Cancer is the leading cause of death from disease in children and adolescents in Canada. Approximately 10,000 children are living with cancer in this country and 1,500 more are diagnosed every year.

September is National Childhood Cancer Awareness Month – a time to reflect on how we can better understand and address the needs of children affected by cancer, as well as their families and caregivers.

While September typically heralds the excitement of back-to-school shopping, reuniting with friends, and gearing up for a new academic year, the reality is starkly different for families grappling with a childhood cancer diagnosis.

In BC alone, 155 children are diagnosed with cancer each year, with over 100 of them forced to swap classrooms for chemotherapy treatments. Though BC Children’s Hospital boasts the highest survival rate for childhood cancers in Canada, the devastating truth remains: 1 in 5 children tragically lose their battle.

In the fight against childhood cancer, early detection and treatment are vital for improving survival rates. Parents, caregivers, extended family members, and healthcare professionals all play a crucial part in recognizing the signs and symptoms early on.

What is World Alzheimer’s Month?

World Alzheimer’s Month takes place every September and World Alzheimer’s Day is on 21 September each year.

Each September, people unite from all corners of the world to raise awareness and to challenge the stigma that persists around Alzheimer’s disease and all types of dementia.

There are over 10 million new cases of dementia each year worldwide, implying one new case every 3.2 seconds.

The global awareness-raising campaign focuses on attitudes toward dementia and seeks to redress the stigma and discrimination that still exist around the condition. It also highlights the positive steps organisations and governments around the world are taking to build a more dementia-friendly society.

Did you know…

- Worldwide, 69 million people sustain a traumatic brain injury every year. Over 1.5 million Canadians are living with an acquired brain injury resulting from traumatic impact, stroke, suffocation and other conditions.

- Every 5 minutes, someone in Canada has a stroke.

- 1 in 4 people accessing mental health and substance use services have a history of brain injury.

- People with a brain injury are 2.5 times more likely to be incarcerated.

- Up to 82% of people experiencing homelessness have a traumatic brain injury.

A traumatic brain injury (TBI) differs significantly from injuries such as broken bones or torn ligaments, which heal over a finite period of time. TBI can instead become a chronic neurological condition whose effects may persist long term. These effects are often invisible to others, yet they profoundly affect the lives of the people who have been injured and of their loved ones.

How we can help

Our psychologists help people navigate the complexities of concussion and brain injury, whether the injury came from a motor vehicle accident, a workplace accident, a stroke or another condition.

After a brain injury, life can be permanently altered, and adapting to the resulting challenges becomes essential. These changes can affect your independence, your abilities, your work, and your relationships with family, friends and caregivers.

Adjusting to what is often called the “new normal” will take time.

Cognitive-behavioral therapy (CBT) and acceptance and commitment therapy (ACT) have been found to be effective interventions for people living with TBI.

Therapy can help you adapt to the mental and physical problems caused by TBI.

Are you curious to hear more about how we can help you?

Whatever you want to achieve through therapy, our caring team is ready to help.

Call us at (1) 778 353 2553 or submit a contact form using the link below.